Insights from the panel ‘Research assessment under scrutiny – towards more holistic and qualitative-oriented systems?’

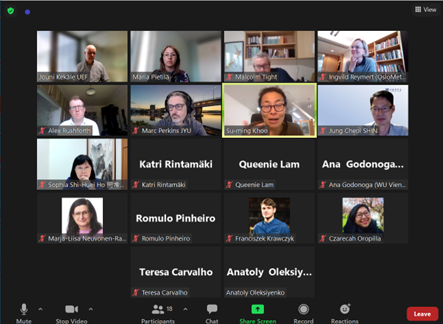

Our YUFERING team at UEF took the opportunity to organize a panel discussion at the 34th Annual CHER Conference. CHER is the Consortium of Higher Education Researchers.

The conference took place online on 1–2 September 2022. The panel was titled ‘Research assessment under scrutiny – towards more holistic and qualitative-oriented systems?’ Although some time has passed since the conference, the topic is not outdated – quite the opposite (see, e.g., CoARA).

The panelists included Ingvild Reymert, Alex Rushforth, and Malcolm Tight. Ms. Reymert is the head of studies and associate professor at Oslo Metropolitan University, and researcher at the Nordic Institute for Studies of Innovation, Research and Education (NIFU). Mr. Rushforth is assistant professor at CWTS, Leiden University. Mr. Tight is Professor of Higher Education at Lancaster University. The UEF team – Jouni Kekäle, Maria Pietilä, and Katri Rintamäki – acted as the hosts of the panel.

The panel was connected to the YUFERING project of the YUFE university alliance.

Key problems in research assessment

Our first discussion topic centered on the key problems in research assessment at the individual level, with the European reform process on research assessment as the context. We asked the panelists to reflect on why the reform processes had recently accelerated and what the key drivers for change might be. We also encouraged the panelists to consider whether academic recruitment and individual-level research assessment required substantial changes.

Much of the discussion revolved around national and institutional differences. Professor Tight cautioned against focusing only on research-led universities, as the missions of higher education institutions vary. Associate professor Reymert reflected on assessment policies and practices in the context of Norway. According to her research, researchers are already evaluated in diverse ways at Norwegian universities. Although the use of bibliometrics in academic recruitment has increased, bibliometrics do not seem to determine the outcomes of the processes. Assistant professor Rushforth reminded the audience that responsible metrics is not a new idea. Recently, the topic has been re-packaged in an interesting way around CoARA (Coalition for Advancing Research Assessment), which brings together the topics of responsible metrics, open science, and research integrity.

Pros and cons of peer review

The discussion then moved to assessment methods in academic recruitment and promotion processes. (Over)reliance on problematic indicators has been a common target for criticism in the dominant research assessment systems.

However, qualitative assessment, such as peer review, has its own reported biases and is more laborious for institutions and researchers. Associate professor Reymert noted that in situations where there are many competent candidates, universities may continue to need bibliometrics during the first screening of candidates. Assistant professor Rushforth stated that while assessment by peers should be central, we should also acknowledge its problems. For example, based on his experience of editing higher education journals, professor Tight noted that peer reviewing may sometimes be subjective. There are also limits to researchers’ workload, which makes it problematic to demand more peer reviewing from them.

Acknowledging societal engagement in assessment

The last part of the discussion was about recognizing researchers’ societal engagement and outreach activities in assessment. Associate professor Reymert noted that early-career researchers in fixed-term positions, in particular, have incentives to focus on research in order to compete for their next positions. Increasing the number of permanent positions in academia could offer academics more opportunities to be active in society. From the perspective of universities, however, this might require more stability in the level of public funding.

Some universities, such as Erasmus University, have created sets of impact indicators. The panelists emphasized that better recognition of researchers’ diverse roles is important. However, it is not easy to devise simple indicators that would not favor certain activities over others. Reflecting on experiences from the Research Excellence Framework in the United Kingdom, professor Tight remarked that some activities or aspects of societal engagement are not as easy to express or verify as others.

Insights from the audience

The general discussion centered on hypercompetition, the overproduction of doctorates and the scarcity of academic positions, and the power of numbers and metrics in decision-making (e.g., simplicity is often called for, and indicators may be treated as objective information).

We wish to thank the panelists and the whole group for the active discussion!

Maria, Jouni, and Katri